If you are running Openfire 3.6.4, I suggest you disable PEP. It has a memory leak and could affect your testing.

Thanks, but I’ve upgraded to 3.7.1 in the meantime. I didn’t notice any leaks while testing with 3.6.4; memory always stayed within the same range. But maybe I wasn’t stressing it enough.

It is unlikely that your tests are actually using PEP.

Do not assume I do not have an entire battery of tests lined up just for pubsub

It’s just that with only 4,000 users, there may not have been much stress going on.

Then PEP will almost certainly give you grief when you get there.

Pubsub, well, that depends on whether you are using persistent nodes. Persistent nodes leak memory in versions prior to the (still unreleased) 3.7.2.

I would be very curious about other potential issues though, such as a large number of subscribers. There are definitely some bottlenecks there as well, but I would be curious to see what the current performance is like.

Good luck!

Ok, the answer seems to be: at least 200,000 on an Amazon large server.

You have to tweak OF’s caches and you only get acceptable performance when all users are cached - meaning that if the server crashes and many users try to reconnect at once, you’re basically screwed. The DB must also be up to speed; in my case, tweaking PostgreSQL helped boost performance.

FWIW, the default configuration was only good for 4,000 users, so it may be a good idea to offer more sensible defaults out of the box.

Alex, thanks for providing this information. I am sure many of us will find this very useful.

I have marked your question as answered. Could we ask a big favour please?

Could you, at any spare moment, document the tweaks you carried out to get from 4,000 to 200,000? This would help to change the default values baked into the code.

My cache settings are:

- cache.username2roster.size: 12000000

- cache.group.size: 40000000

- cache.userCache.size: 35000000

- cache.lastActivity.size: 1500000

- cache.offlinePresence.size: 1500000

The values are not fine tuned, but they’re good enough so that cache culling happens every few minutes instead of tens or hundreds of times a second - that was really killing performance. I have only used users with no rosters. Once rosters come into play, additional caches might need adjusting (also the number of connections might take a nose-dive).

I don’t know if you want the default caches to be good enough for 200,000 users; that may waste a lot of memory for smaller installations. I think the nicest approach would be to document something like: “you need roughly X bytes for cache Y for each 10,000 users”. Then provide defaults good enough for 25,000, maybe 50,000 users and make that clear in the documentation. I won’t have time to make those measurements and you probably won’t either, but I think you can derive something from the values above. Should any other caches need adjusting, I’ll try to post updates here. Alternatively, you could just document that admins should watch out for cache pruning and ensure it doesn’t happen too often. That would be the easy way out.
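As a rough starting point for the “X bytes for cache Y per 10,000 users” idea, the settings above can be scaled linearly. This is only a sketch: it assumes cache needs grow linearly with user count and that the posted values (not fine-tuned, and measured with empty rosters) correspond to about 200,000 users. The function name `estimate_cache_sizes` is made up for illustration.

```python
# Cache sizes (in bytes) posted above, which worked for about 200,000 users.
MEASURED_USERS = 200_000
MEASURED_CACHE_BYTES = {
    "cache.username2roster.size": 12_000_000,
    "cache.group.size": 40_000_000,
    "cache.userCache.size": 35_000_000,
    "cache.lastActivity.size": 1_500_000,
    "cache.offlinePresence.size": 1_500_000,
}


def estimate_cache_sizes(target_users):
    """Scale the measured sizes linearly to target_users.

    Linear scaling is an assumption, not a measured property of Openfire.
    """
    return {
        name: size * target_users // MEASURED_USERS
        for name, size in MEASURED_CACHE_BYTES.items()
    }


if __name__ == "__main__":
    for users in (25_000, 50_000):
        print(f"--- {users} users ---")
        for name, size in estimate_cache_sizes(users).items():
            print(f"{name}: {size}")
```

Treat the output as a first guess and then watch how often the caches are culled; once rosters are in use the real numbers will likely be higher.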

I have increased the number of DB connections from 25 to 100, but I can’t say whether that helped any as I haven’t tested the change in isolation.

It may also be worth documenting that cache sizes are measured in bytes. I assumed they were measured in entries, which was quite a head-scratcher.

Top marks to you, Alex. Thanks.

I forgot to mention that at some point I had to increase the heap size, but that’s a pretty common trick and quite obvious when you have to do it.

Alex,

I have grouped everyone in the organization into their respective departments so that colleagues can find others just by going to the displayed department. So there are about 80 groups in every user’s roster.

I have been adjusting the cache size and maxLifetime settings but can’t seem to get them right. When many users log in during peak time, the server responds slowly, although the logins do succeed eventually.

Can you share your maxLifetime cache setting, or any others that you think would have an impact as well?

Thanks for the good postings.

I have yet to test users actually having contacts. I have used the default lifetime, which I believe is ‘never expire’.

But the catch is users have to be cached beforehand. When users are not cached and there are many incoming connections, the performance is poor. I had authentication requests that took over an hour to complete (this was a stress test, normal usage shouldn’t reach that high).

Hi Alex,

Regarding your “Correct Answer”.

What do you mean by all users being cached? I need a seamless experience for my end users. I mean, even if Openfire crashes, clustering or some other fault-tolerant technology should help me achieve a seamless experience for the end user.

I.e., they should still be able to use IM without realizing that a crash or some other fault has happened.

Is this possible with 200,000 users?

Your answer says 200,000 users, but I would be happy with at least 20,000-50,000 users as long as Openfire is stable. Then it’s just a question of deploying more servers for more users.

Thanks

Vinu

I mean, to get good performance I had to do a first pass and log in each and every user in order for them to get cached. If Openfire has to read users from the DB, the performance drops sharply. I tested this with a single server using PostgreSQL, YMMV.

Clustering will help by caching sessions and such, but there are clients (like Apple’s iMessage - or whatever it’s called) that just won’t do SRV lookups. These will have no option but to log in again, unless you’re prepared to work some low-level networking magic.

I hope this clears things up a bit for you,

Alex

I am still very new to XMPP and Openfire, and only moderately familiar with Java, so I don’t understand all the terminology. I hope to get there in the next few weeks.

Can you explain what you mean by low-level networking magic? Are you talking about BSD socket programming at the TCP/IP level? What kind of networking magic do you have in mind?

I heard that a company called Nimbuzz uses Openfire and they claim to have 150 million users. So I don’t know how they are handling such a large user base with Openfire.

I mean, if the client doesn’t do DNS SRV lookups, it will use an IP to connect. If you want the server to change, but at the same time the client to continue using the same IP, you’ll need a solution that’s lower level than IP. I have no idea what that solution would look like.

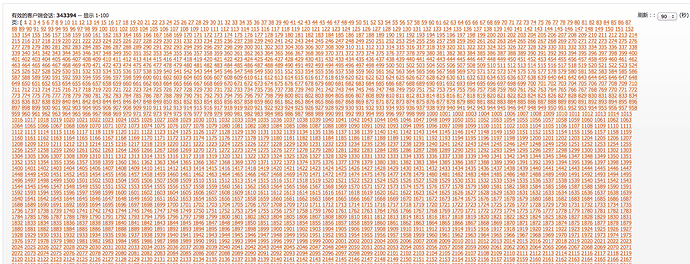

At last we got more than 300k users per server.

The server has 2x Xeon E5-2620 and 32 GB RAM.

We just tweaked the OF caches and the server limits.

Thank you for sharing this, especially the Openfire screenshot.

Hi Zeus,

Thanks for the screenshot, we are running similar tests but couldn’t achieve more than 5k users.

It would help us if you could share the OS tuning parameters and cache sizes in Openfire. Though we’ve gone through this forum to get this info, somehow we are unable to shoot up above 5k.

This could help us verify our settings once again. Below are the existing configurations in our system.

OS Params:

cat /proc/sys/fs/file-max

fs.file-max = 102642

open files (-n) 65536

ulimit -Hn

65536

ulimit -Sn

65536

Cache Size:

cache.group.size → -1

cache.userCache.size → -1

cache.username2roster.size → -1

cache.vcardCache.size → -1

We tuned limits.conf like this:

    * - nofile 819200
    * - nproc 409600

65535 is too low; it can only support 20k users.
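Every client connection takes at least one file descriptor on the server, so the open-files limit has to sit well above the expected number of users. A minimal sketch for checking this from the account that runs Openfire follows. The helper name `nofile_is_enough` and the 20% headroom (for jars, logs and DB sockets) are assumptions for illustration, not Openfire recommendations. It is a necessary check only: the report above that 65535 ran out at around 20k users suggests real usage per connection can be higher.

```python
import resource


def nofile_is_enough(soft_limit, target_connections, headroom_percent=20):
    """Return True if soft_limit covers target_connections plus headroom.

    headroom_percent is an assumed margin, not a measured value.
    """
    if soft_limit == resource.RLIM_INFINITY:
        return True
    needed = target_connections * (100 + headroom_percent) // 100
    return soft_limit >= needed


if __name__ == "__main__":
    # Limits of the current process; run this as the Openfire user.
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    print(f"soft={soft} hard={hard}")
    verdict = "ok" if nofile_is_enough(soft, 300_000) else "too low"
    print(f"300000 connections: {verdict}")
```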

Change openfire.sh like this:

openfire_exec_command="exec $JAVACMD -server $OPENFIRE_OPTS -Xmx30000M -Djava.nio.channels.spi.SelectorProvider=sun.nio.ch.EPollSelectorProvider -classpath \"$LOCALCLASSPATH\" -jar \"$OPENFIRE_LIB/startup.jar\""

Set -Xmx to suit your memory, and use the Sun NIO epoll selector.

Most important, the Tsung client machine must be tuned as well.

Change sysctl.conf:

net.ipv4.ip_local_port_range = 1024 65535
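The port range matters on the load-generator side because every outgoing connection from one source IP to the same server address and port needs its own local port. A back-of-the-envelope sketch of how many source IPs (or client machines) that implies; the helper name `source_ips_needed` is made up for illustration, and 300,000 is the target from this thread.

```python
def source_ips_needed(target_connections, port_low=1024, port_high=65535):
    """Source IPs needed to open target_connections to one server IP:port.

    Each source IP can use every local port in [port_low, port_high] once
    per destination address and port.
    """
    ports_per_ip = port_high - port_low + 1
    # Ceiling division without floats.
    return -(-target_connections // ports_per_ip)


if __name__ == "__main__":
    print(source_ips_needed(300_000))
```

With the range above, one IP gets 64,512 usable ports, so 300,000 simulated users need at least 5 source IPs on the client side.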

Disable SELinux and iptables:

setenforce 0

service iptables stop

Change /etc/security/limits.conf:

    * - nofile 409600
    * - nproc 102400

The network is also important. If you test over the Internet, consider router and firewall limits. A home router can only support 5,000 users at most.

We ran our tests over a LAN.