I am using Openfire 3.9.3. Please let me know which Hazelcast version is compatible with this Openfire version.

I am using Hazelcast 1.2.0 and get this error:

com.jivesoftware.util.cache.ClusteredCacheFactory - Failed to execute cluster task within 30 seconds

I am getting two exceptions:

- TimeoutException: the cluster task fails to execute within 30 seconds.
- HazelcastSerializationException (ClassNotFoundException): it first occurs after 2 hours and 30 minutes, and from then on it is thrown every second.

If anyone has fixed these exceptions, please help me solve them.

"Failed to execute cluster task within 30 seconds" issue: help

- Openfire version: 3.10.2
- Hazelcast plugin version: 2.0 (Hazelcast 3.4)
- Two-server cluster, currently 5k sessions in total

Attached logs: [error.log] [debug.log]

“Failed to execute cluster task within 30 seconds” issue.

My guess at a fix, based on a hint from www.javacreed.com/stopping-the-future-in-time/.

Edited source: org.jivesoftware.openfire.plugin.util.cache.ClusteredCacheFactory.java

Please check the two added lines in the `TimeoutException` handler.

```java
// …

public Object doSynchronousClusterTask(ClusterTask task, byte[] nodeID) {
    if (cluster == null) { return null; }

    Member member = getMember(nodeID);
    Object result = null;

    // Check that the requested member was found
    if (member != null) {
        // Asynchronously execute the task on the target member
        logger.debug("Executing DistributedTask: " + task.getClass().getName());
        Future future = null;
        try {
            future = hazelcast.getExecutorService(HAZELCAST_EXECUTOR_SERVICE_NAME)
                    .submitToMember(new CallableTask(task), member);
            result = future.get(MAX_CLUSTER_EXECUTION_TIME, TimeUnit.SECONDS);
            logger.debug("DistributedTask result: " + (result == null ? "null" : result));
        } catch (TimeoutException te) {
            // Added: cancel the pending task so it does not keep running after the timeout
            if (future != null) future.cancel(true);
            logger.error("future is canceled, caused by TimeoutException");
            logger.error("Failed to execute cluster task within " + MAX_CLUSTER_EXECUTION_TIME + " seconds", te);
        } catch (Exception e) {
            logger.error("Failed to execute cluster task", e);
        }
    } else {
        String msg = MessageFormat.format("Requested node {0} not found in cluster", StringUtils.getString(nodeID));
        logger.warn(msg);
        throw new IllegalArgumentException(msg);
    }

    return result;
}

// …
```

Make the same edit in the other method: `Collection doSynchronousClusterTask(ClusterTask task, boolean includeLocalMember)`
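To make the idea behind this change concrete, here is a minimal sketch of the wait-with-timeout-then-cancel pattern. It uses a plain JDK `ExecutorService` as a stand-in for Hazelcast's executor service (an assumption: that the `Future` returned by `submitToMember` behaves like a standard `java.util.concurrent.Future` here), and the class and method names are hypothetical.

```java
import java.util.concurrent.*;

// Hypothetical demo class: plain JDK executor standing in for Hazelcast's IExecutorService.
public class TimeoutCancelDemo {

    /** Runs the task, waits at most timeoutMs, and cancels it on timeout. Returns null on timeout or failure. */
    static <T> T runWithTimeout(ExecutorService executor, Callable<T> task, long timeoutMs) {
        Future<T> future = executor.submit(task);
        try {
            return future.get(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (TimeoutException te) {
            // Interrupt the worker so the abandoned task does not keep occupying a thread.
            future.cancel(true);
            System.err.println("Task cancelled after " + timeoutMs + " ms");
            return null;
        } catch (InterruptedException ie) {
            Thread.currentThread().interrupt();
            return null;
        } catch (ExecutionException ee) {
            System.err.println("Task failed: " + ee.getCause());
            return null;
        }
    }

    public static void main(String[] args) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        // A fast task completes normally and prints "done".
        System.out.println(runWithTimeout(executor, () -> "done", 1000));
        // A slow task (sleeps 10 s) is cancelled after 200 ms and prints "null".
        System.out.println(runWithTimeout(executor, () -> { Thread.sleep(10_000); return "late"; }, 200));
        executor.shutdownNow();
    }
}
```

Note that cancelling only frees the caller; it does not remove whatever makes the cluster task slow in the first place, and whether `cancel(true)` actually interrupts a task already running on a remote member may depend on the Hazelcast version.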

Hi folks … I believe the timeout issue has been identified and will be resolved in the next release of the plugin (2.1.2). Refer to [OF-943] for more info.

I tried that source (the change I wrote on 2015-09-10), but the timeout error is not solved.

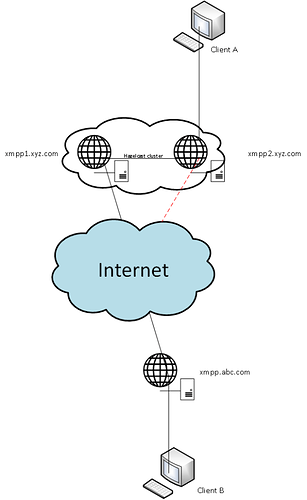

A fundamental question about the Hazelcast clustering system in Openfire: should Clients A and B be able to chat with each other in the case illustrated in the figure above? Client A is connected to Node 2, but Node 2's Internet connection is down, while Node 1's Internet connection is up and alive.

My problem is that when Client A sends messages to Client B, Client B does not receive them. The xmpp2.xyz.com server logs say that it cannot connect to xmpp.abz.com (no route).

The reverse direction works: when Client B chats with Client A, Client A receives all the messages. If Client A is connected to the xmpp1.xyz.com server, everything works in both directions.

Openfire: 3.10.3

Hazelcast cluster plugin: 2.1.2

Hi Juha -

Active S2S connections (for XMPP federation) are managed and shared within a cluster via the “Routing Servers Cache”.

In the scenario depicted, if Node 1 (xmpp1.xyz.com) has previously established a connection to the abc.com domain, then all clients within the xyz.com domain should be able to reach abc.com by routing packets through this node in the cluster.

However, if no prior communication between the XMPP domains has been established, then Client A (connected to Node 2 in xyz.com) would be unable to initiate a new connection to Client B (abc.com) because there is no available route (which is what you observed in the log files).
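The routing behaviour described above can be modelled roughly as follows. This is a toy model with hypothetical names, not Openfire's actual `RoutingTable` code: a shared map from remote domain to the node holding the S2S connection stands in for the Routing Servers Cache, and the online/offline flags stand in for each node's Internet connectivity.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical toy model of S2S route sharing in a cluster (not Openfire's API).
public class S2SRoutingModel {
    // Stand-in for the cluster-wide cache: remote domain -> node holding the S2S connection.
    private final Map<String, String> routingServersCache = new HashMap<>();
    // Assumption for the scenario in the figure: which nodes can reach the Internet.
    private final Map<String, Boolean> nodeOnline = new HashMap<>();

    void setOnline(String node, boolean online) { nodeOnline.put(node, online); }

    /** Returns the node that will carry the packet, or empty if there is no route. */
    Optional<String> route(String fromNode, String remoteDomain) {
        String existing = routingServersCache.get(remoteDomain);
        if (existing != null) {
            // A node already has a connection to this domain: forward the packet through it.
            return Optional.of(existing);
        }
        if (nodeOnline.getOrDefault(fromNode, false)) {
            // The local node opens a new S2S connection and shares it via the cache.
            routingServersCache.put(remoteDomain, fromNode);
            return Optional.of(fromNode);
        }
        // No shared route and the local node cannot connect: the "no route" case in the logs.
        return Optional.empty();
    }

    public static void main(String[] args) {
        S2SRoutingModel cluster = new S2SRoutingModel();
        cluster.setOnline("node1", true);
        cluster.setOnline("node2", false);
        System.out.println(cluster.route("node2", "abc.com")); // Optional.empty (no prior connection)
        System.out.println(cluster.route("node1", "abc.com")); // Optional[node1] (node1 connects)
        System.out.println(cluster.route("node2", "abc.com")); // Optional[node1] (reuses node1's route)
    }
}
```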

Is this a typical scenario for your deployment? I would suggest resolving the issues that cause Node 2 to have limited network connectivity.

Regards,

Tom

Hi Tom,

Big thanks for the reply!

OK. Unfortunately, this is my “default” scenario and environment. Do you have any hints on where to start, and what code changes I would need so that all outgoing traffic leaves from Node 1, regardless of which node a client is connected to?

BR, Juha

You might be able to write a custom plugin for this, but a connection manager might be a better solution than a cluster.

Hi Tom,

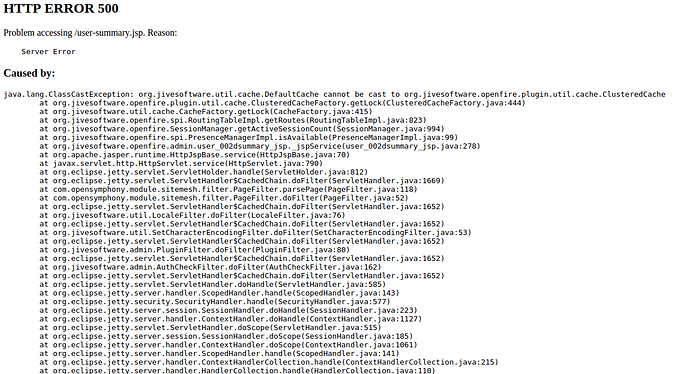

I have set up clustering on two nodes with the following specification:

Java: 1.8.0_77 Oracle Corporation – Java HotSpot™ 64-Bit Server VM

Openfire: 4.0.2

Hazelcast: 2.2.0

When I click the Users/Groups tab in the admin console, it throws the exception below:

```
2016.05.03 06:37:30 org.jivesoftware.openfire.handler.IQHandler - Internal server error
java.lang.ClassCastException: org.jivesoftware.util.cache.DefaultCache cannot be cast to org.jivesoftware.openfire.plugin.util.cache.ClusteredCache
    at org.jivesoftware.openfire.plugin.util.cache.ClusteredCacheFactory.getLock(ClusteredCacheFactory.java:444)
    at org.jivesoftware.util.cache.CacheFactory.getLock(CacheFactory.java:415)
    at org.jivesoftware.openfire.spi.RoutingTableImpl.addClientRoute(RoutingTableImpl.java:180)
    at org.jivesoftware.openfire.SessionManager.addSession(SessionManager.java:588)
    at org.jivesoftware.openfire.session.LocalClientSession.setAuthToken(LocalClientSession.java:684)
    at org.jivesoftware.openfire.handler.IQBindHandler.handleIQ(IQBindHandler.java:154)
    at org.jivesoftware.openfire.handler.IQHandler.process(IQHandler.java:66)
    at org.jivesoftware.openfire.IQRouter.handle(IQRouter.java:372)
    at org.jivesoftware.openfire.IQRouter.route(IQRouter.java:115)
    at org.jivesoftware.openfire.spi.PacketRouterImpl.route(PacketRouterImpl.java:78)
    at org.jivesoftware.openfire.net.StanzaHandler.processIQ(StanzaHandler.java:342)
    at org.jivesoftware.openfire.net.ClientStanzaHandler.processIQ(ClientStanzaHandler.java:99)
    at org.jivesoftware.openfire.net.StanzaHandler.process(StanzaHandler.java:307)
    at org.jivesoftware.openfire.net.StanzaHandler.process(StanzaHandler.java:199)
    at org.jivesoftware.openfire.nio.ConnectionHandler.messageReceived(ConnectionHandler.java:181)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain$TailFilter.messageReceived(DefaultIoFilterChain.java:690)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain.callNextMessageReceived(DefaultIoFilterChain.java:417)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain.access$1200(DefaultIoFilterChain.java:47)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain$EntryImpl$1.messageReceived(DefaultIoFilterChain.java:765)
    at org.apache.mina.core.filterchain.IoFilterAdapter.messageReceived(IoFilterAdapter.java:109)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain.callNextMessageReceived(DefaultIoFilterChain.java:417)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain.access$1200(DefaultIoFilterChain.java:47)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain$EntryImpl$1.messageReceived(DefaultIoFilterChain.java:765)
    at org.apache.mina.filter.codec.ProtocolCodecFilter$ProtocolDecoderOutputImpl.flush(ProtocolCodecFilter.java:407)
    at org.apache.mina.filter.codec.ProtocolCodecFilter.messageReceived(ProtocolCodecFilter.java:236)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain.callNextMessageReceived(DefaultIoFilterChain.java:417)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain.access$1200(DefaultIoFilterChain.java:47)
    at org.apache.mina.core.filterchain.DefaultIoFilterChain$EntryImpl$1.messageReceived(DefaultIoFilterChain.java:765)
    at org.apache.mina.core.filterchain.IoFilterEvent.fire(IoFilterEvent.java:74)
    at org.apache.mina.core.session.IoEvent.run(IoEvent.java:63)
    at org.apache.mina.filter.executor.OrderedThreadPoolExecutor$Worker.runTask(OrderedThreadPoolExecutor.java:769)
    at org.apache.mina.filter.executor.OrderedThreadPoolExecutor$Worker.runTasks(OrderedThreadPoolExecutor.java:761)
    at org.apache.mina.filter.executor.OrderedThreadPoolExecutor$Worker.run(OrderedThreadPoolExecutor.java:703)
    at java.lang.Thread.run(Thread.java:745)
```

I keep encountering this bug as well, and it is quite frustrating. It just happened again when deploying the Monitoring plugin to both nodes in the cluster.

There’s a more recent thread at https://community.igniterealtime.org/message/259162 which has no real resolution, but you may find something useful there.

Can the plugin store a Map-like object?

Openfire does, yes. Have a look at

org.jivesoftware.util.cache.CacheFactory.createCache(String name)

It gives you a cluster-wide Map.

One slight gotcha to be aware of: there is an open bug, https://issues.igniterealtime.org/browse/HZ-6, which means the max size property is not currently honoured in a clustered setup unless you manually edit the Hazelcast config file.
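As a rough sketch of what such a manual edit might look like, assuming the plugin uses a Hazelcast 3.x-style XML config (often `hazelcast-cache-config.xml` in the plugin, but check your installation) and that each Openfire cache maps onto a Hazelcast map of the same name. The cache name `My Custom Cache` and the values are hypothetical.

```xml
<!-- Hypothetical entry for a custom cache; the map name is assumed to match the cache name -->
<map name="My Custom Cache">
    <backup-count>1</backup-count>
    <!-- Cap the number of entries held on each cluster member -->
    <max-size policy="PER_NODE">10000</max-size>
    <!-- Evict least-recently-used entries once the cap is reached -->
    <eviction-policy>LRU</eviction-policy>
</map>
```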

Thanks for your reply. I am now using ClusteredCacheFactory to cache a HashMap object, but every time, the Cache.get method returns a different object instead of the one I created before. The source code is as follows:

```java
// …
Cache sendMapCache = CacheFactory.createCache(SEND_MAP_CACHE_NAME);
sendMapCache.setMaxLifetime(2 * JiveConstants.WEEK);
// …
if (!sendMapCache.containsKey(sender)) {
    sendMapCache.put(sender, new HashMap<String, Long>());
}
Map<String, Long> record = (Map<String, Long>) sendMapCache.get(sender); // the address of the record is different for the same user
long now = new Date().getTime();
record.put(receiver, new Long(now));
System.out.println("The size: " + record.size());
// …
```

Unfortunately, the size of the record for every sender is always 1. What should I do? Thanks a lot.

Yes, the semantics of the underlying Hazelcast IMap (http://docs.hazelcast.org/docs/3.9.2/javadoc/com/hazelcast/core/IMap.html) mean that an object placed into the map is always serialised, so when you retrieve a value by key you get a different object, albeit with the same underlying values.
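The copy-on-read behaviour can be reproduced without Hazelcast. The sketch below is a pure-JDK illustration (hypothetical class name) in which every `put()` serialises the value to bytes and every `get()` deserialises a fresh copy, roughly mirroring what the `IMap` semantics above imply.

```java
import java.io.*;
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for a serialising cluster map: values are stored as bytes.
public class SerialisedMapDemo {
    private final Map<String, byte[]> store = new HashMap<>();

    void put(String key, Serializable value) {
        try (ByteArrayOutputStream bytes = new ByteArrayOutputStream();
             ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(value);
            out.flush(); // make sure all buffered data reaches the byte array
            store.put(key, bytes.toByteArray());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    Object get(String key) {
        byte[] data = store.get(key);
        if (data == null) return null;
        // Every call deserialises a brand-new object from the stored bytes.
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return in.readObject();
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        SerialisedMapDemo cache = new SerialisedMapDemo();
        cache.put("alice", new HashMap<String, Long>());

        @SuppressWarnings("unchecked")
        Map<String, Long> first = (Map<String, Long>) cache.get("alice");
        first.put("bob", 1L); // modifies only the local copy

        @SuppressWarnings("unchecked")
        Map<String, Long> second = (Map<String, Long>) cache.get("alice");
        System.out.println(first == second); // false: a new object on every get()
        System.out.println(second.size());   // 0: the earlier put() never reached the store
    }
}
```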

Do you mean I cannot use this approach for persistence, or is there a better way to implement it? Thanks a lot.

I just looked a bit closer at the code you’ve written. Stuff is only serialised/persisted when it is placed in the Cache. So after calling record.put() you’ll need to call sendMapCache.put(sender, record) to persist it.
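A minimal sketch of that read-modify-write pattern, assuming copy-on-put/copy-on-get semantics. `CopyingCache` is a hypothetical stand-in that copies values instead of serialising them, and `recordSend` is a hypothetical helper mirroring the code in the question.

```java
import java.util.HashMap;
import java.util.Map;

public class ReadModifyWriteDemo {
    // Hypothetical stand-in for a serialising cluster cache: values are copied on put() and get(),
    // so callers never hold a reference to the stored object.
    static final class CopyingCache {
        private final Map<String, Map<String, Long>> store = new HashMap<>();

        void put(String key, Map<String, Long> value) { store.put(key, new HashMap<>(value)); }

        Map<String, Long> get(String key) {
            Map<String, Long> v = store.get(key);
            return v == null ? null : new HashMap<>(v);
        }
    }

    /** Records that sender messaged receiver at time now; returns the updated record size. */
    static int recordSend(CopyingCache sendMapCache, String sender, String receiver, long now) {
        Map<String, Long> record = sendMapCache.get(sender);
        if (record == null) {
            record = new HashMap<>();
        }
        record.put(receiver, now);
        // Write the modified copy back; without this put() the change is lost.
        sendMapCache.put(sender, record);
        return record.size();
    }

    public static void main(String[] args) {
        CopyingCache cache = new CopyingCache();
        System.out.println(recordSend(cache, "alice", "bob", 1L));   // 1
        System.out.println(recordSend(cache, "alice", "carol", 2L)); // 2
    }
}
```

In a real cluster, two nodes running this sequence at the same time could overwrite each other's update, so guarding the get/modify/put with a cluster-wide lock (Openfire's `CacheFactory.getLock`, which appears in the stack trace earlier in this thread) may be worth considering.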