I’m currently modifying Openfire 3.3.3 to broadcast certain messages to all available users. The broadcasts work fine when Spark starts up, but after a while Spark becomes unresponsive. I have varied the message size from small to large: the larger the message, the faster Spark runs out of memory.

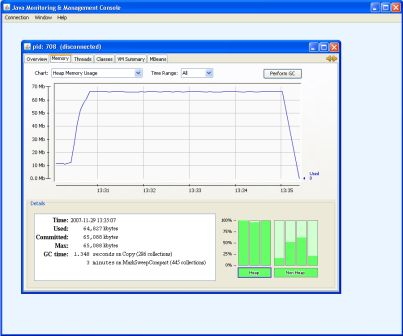

I have attached a screenshot of jconsole.exe monitoring Spark from startup. As the screenshot shows, Spark's heap usage starts at 10 MB but quickly climbs to the 65 MB maximum.
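jconsole reads these heap figures over JMX from the JVM's `MemoryMXBean`. If it is more convenient to log them from inside a process (for example from a client-side plugin, which is an assumption here and not something the post describes), a minimal sketch using only the standard `java.lang.management` API could look like this. `HeapWatch` and `heapSummary` are hypothetical names.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;

public class HeapWatch {

    /** Returns a one-line summary of current heap usage in megabytes. */
    public static String heapSummary() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        MemoryUsage heap = memory.getHeapMemoryUsage();
        long mb = 1024L * 1024L;
        // getMax() returns -1 when the JVM has no defined heap maximum
        String max = heap.getMax() < 0 ? "undefined" : (heap.getMax() / mb) + " MB";
        return "heap used=" + (heap.getUsed() / mb) + " MB, committed="
                + (heap.getCommitted() / mb) + " MB, max=" + max;
    }

    public static void main(String[] args) throws InterruptedException {
        // Print one sample per second: the same used/committed/max values jconsole graphs
        for (int i = 0; i < 5; i++) {
            System.out.println(heapSummary());
            Thread.sleep(1000);
        }
    }
}
```

A steadily rising "used" value that never drops back after garbage collection usually points to objects being retained rather than just allocated.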

I have tried changing the message type from Broadcast to Chat.

I have also tried sending smaller amounts of data (100 bytes) per broadcast, but that only makes Spark take slightly longer to run out of heap memory.
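The fact that smaller messages only delay the failure is consistent with a client that keeps every message it receives (for example in an open chat window or transcript). That is a hypothesis, not something confirmed in the Spark source. The sketch below illustrates the difference between an unbounded history, whose retained size grows with message count times message size, and a capped one that evicts the oldest entries. `HistoryGrowth` and its methods are hypothetical and are not Spark classes.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

public class HistoryGrowth {

    /** Builds a message body of the given length. */
    static String makeBody(int length) {
        char[] chars = new char[length];
        Arrays.fill(chars, 'x');
        return new String(chars);
    }

    /** Total characters held by a history collection. */
    static long retainedChars(Iterable<String> history) {
        long total = 0;
        for (String s : history) {
            total += s.length();
        }
        return total;
    }

    /** Keeps every message: retained size = messages * bodyLength. */
    public static long unbounded(int messages, int bodyLength) {
        List<String> history = new ArrayList<>();
        for (int i = 0; i < messages; i++) {
            history.add(makeBody(bodyLength));
        }
        return retainedChars(history);
    }

    /** Keeps only the newest `capacity` messages, evicting the oldest first. */
    public static long bounded(int messages, int bodyLength, int capacity) {
        if (capacity <= 0) {
            throw new IllegalArgumentException("capacity must be positive");
        }
        Deque<String> history = new ArrayDeque<>();
        for (int i = 0; i < messages; i++) {
            if (history.size() == capacity) {
                history.removeFirst();
            }
            history.addLast(makeBody(bodyLength));
        }
        return retainedChars(history);
    }

    public static void main(String[] args) {
        System.out.println(unbounded(2000, 100));     // 200000
        System.out.println(unbounded(2000, 1000));    // 2000000
        System.out.println(bounded(2000, 1000, 500)); // 500000
    }
}
```

With unbounded retention, shrinking the body by 10x only slows growth by 10x; memory still runs out eventually. A cap keeps retained size flat no matter how long the broadcast runs.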

In my Openfire code, I call the broadcast method of the SessionManager object from a separate thread that my plugin starts.

Here is the run method of my broadcast thread:

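```java
public void run() {
    // continueBroadcast should be declared volatile so that another thread
    // can stop this loop by setting it to false
    continueBroadcast = true;

    Message message = new Message();
    message.setTo("all");
    message.setFrom("test");
    message.setType(Message.Type.chat);

    while (continueBroadcast) {
        String bodyMessage = "HelloWorld";
        message.setBody(bodyMessage);
        try {
            sessionManager.broadcast(message);
            // 10 ms pause: at most about 100 broadcasts per second to every session
            Thread.sleep(10);
        } catch (UnauthorizedException ue) {
            System.out.println("Error with broadcast");
            ue.printStackTrace();
        } catch (InterruptedException e) {
            // Restore the interrupt flag and leave the loop instead of spinning on
            Thread.currentThread().interrupt();
            continueBroadcast = false;
        }
    }
}
```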
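The loop above sleeps only 10 ms, so every connected client receives up to roughly 100 messages per second. A minimal sketch of a rate-limited alternative, using `java.util.concurrent.ScheduledExecutorService` instead of a sleep loop, is below. `RateLimitedBroadcaster` is a hypothetical class, and `sender` is a stand-in: in a plugin it would wrap the Openfire call (build the `Message` and call `sessionManager.broadcast(...)`), which is left out so the sketch compiles on its own. The period is a parameter; choosing it is up to the plugin.

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;

public class RateLimitedBroadcaster {
    private final ScheduledExecutorService scheduler =
            Executors.newSingleThreadScheduledExecutor();
    private final Consumer<String> sender; // hypothetical hook around the real broadcast call
    private ScheduledFuture<?> task;

    public RateLimitedBroadcaster(Consumer<String> sender) {
        this.sender = sender;
    }

    /** Sends `body` every `periodMillis` ms until stop() is called. One-shot: no restart after stop(). */
    public synchronized void start(String body, long periodMillis) {
        if (task != null) {
            return; // already running
        }
        task = scheduler.scheduleAtFixedRate(() -> {
            try {
                sender.accept(body);
            } catch (RuntimeException e) {
                // An uncaught exception would silently cancel all future runs
                e.printStackTrace();
            }
        }, 0, periodMillis, TimeUnit.MILLISECONDS);
    }

    /** Cancels the schedule and shuts down the scheduler thread. */
    public synchronized void stop() {
        if (task != null) {
            task.cancel(false);
        }
        scheduler.shutdown();
        try {
            scheduler.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        AtomicInteger sent = new AtomicInteger();
        RateLimitedBroadcaster b = new RateLimitedBroadcaster(body -> sent.incrementAndGet());
        b.start("HelloWorld", 100);
        Thread.sleep(550);
        b.stop();
        System.out.println("sent " + sent.get() + " messages");
    }
}
```

Throttling on the server side only slows the client's memory growth if the client retains every message; it does not remove the underlying retention.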

Does anyone have a solution that prevents Spark from running out of memory? I haven’t looked into the Spark source yet to attempt a fix. Does anyone know how to find the part of the code responsible for this memory problem?
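One standard way to locate what is filling the heap is to take a heap dump and open it in a heap analyzer (for example jhat, which shipped with JDK 6, or Eclipse Memory Analyzer), then look at which object types dominate retained size. On HotSpot JVMs, the real flags `-XX:+HeapDumpOnOutOfMemoryError` and `-XX:HeapDumpPath=...` write a dump automatically when the error occurs; how Spark's launcher accepts JVM options depends on the installation, so treat that part as an assumption. `jmap -dump:format=b,file=...` can also dump a running process. The sketch below shows the programmatic route through `com.sun.management.HotSpotDiagnosticMXBean`, which is HotSpot-specific rather than part of the Java SE API. `HeapDumper` is a hypothetical name.

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.io.IOException;
import java.lang.management.ManagementFactory;

public class HeapDumper {

    /**
     * Writes an .hprof heap dump of the current JVM to `path`.
     * Fails with IOException if the file already exists.
     * liveObjectsOnly=true forces a GC first so only reachable objects are dumped.
     */
    public static void dump(String path, boolean liveObjectsOnly) throws IOException {
        HotSpotDiagnosticMXBean bean = ManagementFactory.newPlatformMXBeanProxy(
                ManagementFactory.getPlatformMBeanServer(),
                "com.sun.management:type=HotSpotDiagnostic",
                HotSpotDiagnosticMXBean.class);
        bean.dumpHeap(path, liveObjectsOnly);
    }

    public static void main(String[] args) throws IOException {
        // Newer JDKs expect the .hprof suffix on the output file name
        String path = args.length > 0 ? args[0] : "spark-heap.hprof";
        dump(path, true);
        System.out.println("Heap dump written to " + path);
    }
}
```

In the analyzer, a large collection of message or document objects reachable from a chat window or history class would support the retention hypothesis; its owning class is the place to start reading the client code.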

Thank you for your help.

-Stuart