Hi,

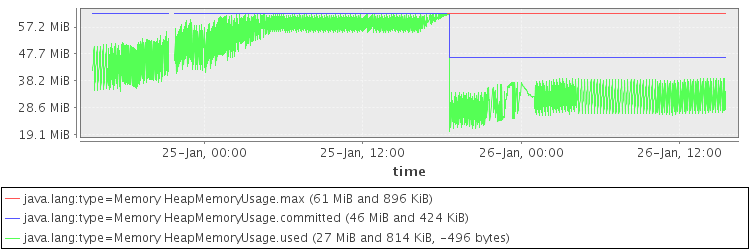

I’m having an issue where, every couple of days, my Openfire server runs out of memory (java.lang.OutOfMemoryError: Java heap space), and I can’t figure out why.

Here’s my environment information:

Java Version: 1.6.0_14 Sun Microsystems Inc. – Java HotSpot™ 64-Bit Server VM

Appserver: jetty-6.1.x

OS/Hardware: Linux / amd64

Java Memory: 25.37 MB of 61.88 MB (41.0%) used

And the only two plugins I have installed are:

Search (1.4.3) - I didn’t install this; it came with the standard install.

java-monitor (1.0) - I installed this to help debug this particular issue, but the problem was happening before I installed it.

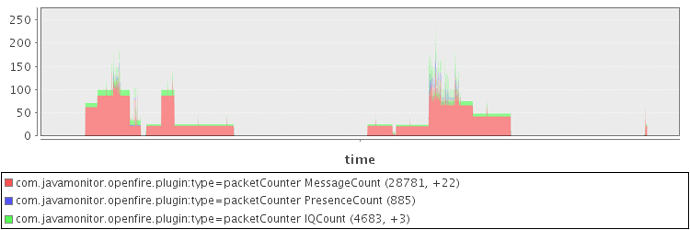

I know the Java memory allocation is somewhat low, but my usage is very light (at most around 10 concurrent users and fairly low stanza traffic). I’ve tried increasing it, which makes the crashes less frequent but doesn’t stop the underlying, unchecked growth in memory use.

I’ve also looked through other threads and tried some of the settings suggested there:

cache.username2roster.maxLifetime = 419430400

xmpp.pep.enabled = false

They didn’t seem to help, hence this new discussion.

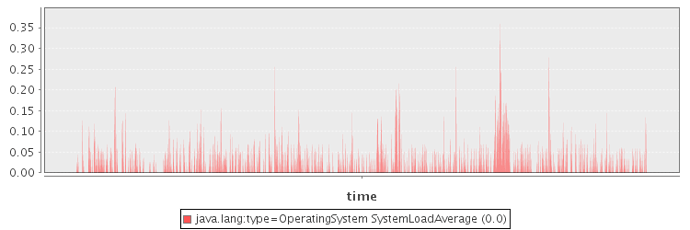

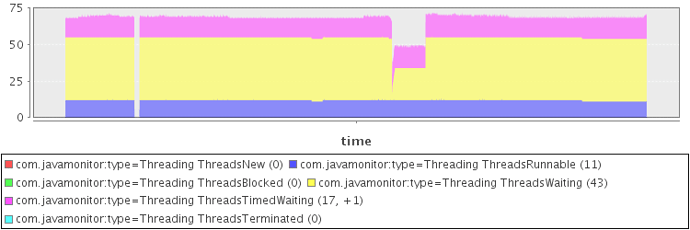

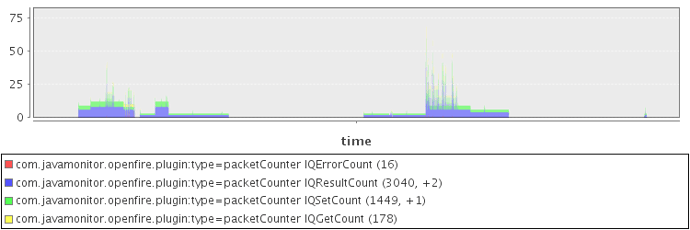

I’m not sure what to look at or try next. As mentioned above, I have the java-monitor plugin running, so I have data from it (though I’m not sure how to save and post a graph, or which one would be useful). From what I can see, heap memory usage simply starts increasing and never stops.
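In case plain text is easier to post than a graph, here is a minimal sketch of sampling heap usage with the standard `java.lang.management` API and printing it in roughly the same "X MB of Y MB (Z%) used" form the admin console shows. `HeapUsageLogger` is a hypothetical name, not part of Openfire or the java-monitor plugin. Run standalone it only measures its own JVM, so it would have to run inside Openfire's process (or be adapted to connect over remote JMX, which I haven't set up) to show the server's heap.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;
import java.util.Locale;

/**
 * Hypothetical helper (not part of Openfire or java-monitor) that samples the
 * heap of the JVM it runs in and prints timestamped, text-only lines.
 */
public class HeapUsageLogger {

    private static final double MB = 1024.0 * 1024.0;

    /** Percentage of the limit currently used; returns -1 if the limit is unknown. */
    public static double percentUsed(long used, long limit) {
        if (limit <= 0) {
            return -1.0;
        }
        return 100.0 * used / limit;
    }

    /** Formats one sample, e.g. "25.37 MB of 61.88 MB (41.0%) used". */
    public static String format(long used, long limit) {
        return String.format(Locale.ROOT, "%.2f MB of %.2f MB (%.1f%%) used",
                used / MB, limit / MB, percentUsed(used, limit));
    }

    public static void main(String[] args) throws InterruptedException {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        // Optional arguments: number of samples and interval in milliseconds.
        int samples = args.length > 0 ? Integer.parseInt(args[0]) : 5;
        long intervalMillis = args.length > 1 ? Long.parseLong(args[1]) : 1000L;
        for (int i = 0; i < samples; i++) {
            MemoryUsage heap = memory.getHeapMemoryUsage();
            // getMax() returns -1 when no limit is defined; fall back to the committed size.
            long limit = heap.getMax() > 0 ? heap.getMax() : heap.getCommitted();
            System.out.println(System.currentTimeMillis() + " " + format(heap.getUsed(), limit));
            Thread.sleep(intervalMillis);
        }
    }
}
```

A steadily rising "used" figure that never drops back after garbage collection over a day or so would match the pattern I'm seeing in java-monitor.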

Any pointers would be greatly appreciated, including any debugging steps I can take to get additional information. Since it will crash again in a day or so, the turnaround for trying something isn’t too long.

Thanks,

Peter