Hey.

I’ve seen the question about upgrading Spark’s bundled Java to version 11, and I understand that this would take a lot of effort.

What about switching to OpenJDK 8 instead?

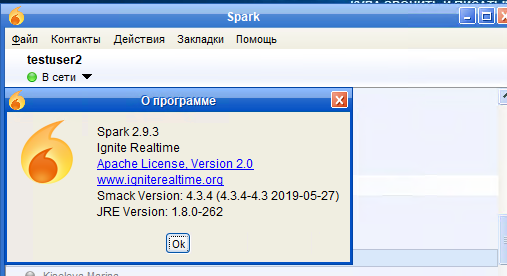

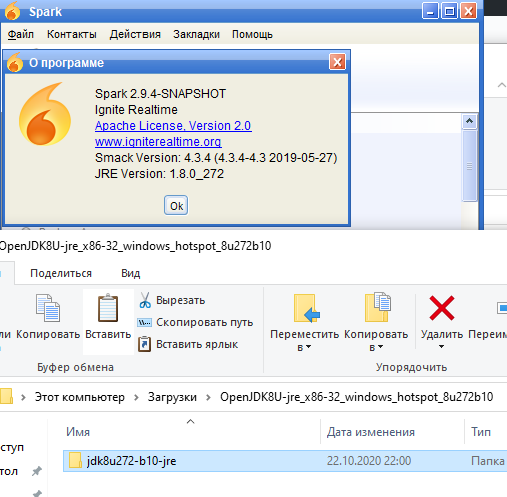

I replaced the contents of the `C:\Program Files (x86)\Spark\jre` folder with the latest version of OpenJDK. Everything works (SSO, chats), and I didn’t get any errors in the Spark logs.
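If anyone wants to double-check which runtime Spark’s `jre` folder actually contains after such a swap, a tiny program like the hypothetical `JvmInfo` below could help. It only reads standard system properties (`java.vendor`, `java.version`, `java.home`). This is a sketch, assuming you compile it with any JDK that can target Java 8 (for example `javac --release 8 JvmInfo.java` on JDK 9 or newer) and then run it with the bundled runtime: `"C:\Program Files (x86)\Spark\jre\bin\java" -cp . JvmInfo`. Running `jre\bin\java -version` gives similar information without any code.

```java
// Hypothetical helper class: reports which Java runtime is executing it.
public class JvmInfo {

    // Builds a one-line summary from standard system properties.
    static String summary() {
        return System.getProperty("java.vendor") + " "
                + System.getProperty("java.version")
                + " at " + System.getProperty("java.home");
    }

    public static void main(String[] args) {
        // java.home is the runtime's install directory, e.g. the Spark jre folder
        // when launched with Spark's bundled java.exe.
        System.out.println(summary());
    }
}
```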

Actually, there have been discussions about no longer bundling Java with either Openfire or Spark. This would make builds easier, leave fewer installers to support, and avoid licensing concerns. Of course, admins and users would then have to install their own Java to run the server and clients. So far there are no firm plans on whether or when to do this: https://igniterealtime.atlassian.net/browse/SPARK-2126

So, I’m not sure it is worth spending time on switching to OpenJDK. I’m guessing it should be possible, because OpenJDK is available in install4j, which is used to build the installers here. But it is not as simple as adding a folder, because the JRE is not in the source code. It is added to the installers during the build process in Bamboo (our CI system). I don’t know much about Bamboo, though. I have tried to look into it a few times, but it is just too complex for me. Usually @akrherz or @guus work with it and configure it.


I have also used Spark with AdoptOpenJDK 8 (another flavor of OpenJDK), and it seems to work fine. Spark even runs with OpenJDK 11 on Linux, but many plugins fail to work. I was not able to run it with Java 11 on Windows.

wroot, thanks for the quick response. I don’t think it’s necessary to unbundle Java from Spark. Bundling is very convenient, because you don’t need to install Java separately.

I think updating Java to the latest version would be useful, because the certificate store would then contain the updated CA and root CA certificates.

It would also bring bug fixes and security improvements.
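To compare which root CAs an old and a new `jre` folder actually trust, one option is to ask the JSSE default `TrustManagerFactory` for its accepted issuers. The class name `TrustStoreCheck` and its optional subject-filter argument are made up for illustration. Initializing the factory with a `null` `KeyStore` makes it use the runtime’s default trust store, which is normally `lib/security/cacerts` inside the JRE unless overridden. A minimal sketch, run with the JRE you want to inspect, e.g. `"C:\Program Files (x86)\Spark\jre\bin\java" -cp . TrustStoreCheck DigiCert`:

```java
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import java.security.cert.X509Certificate;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import javax.net.ssl.TrustManager;
import javax.net.ssl.TrustManagerFactory;
import javax.net.ssl.X509TrustManager;

// Hypothetical helper: lists the CA certificates trusted by the running JRE.
public class TrustStoreCheck {

    // Returns the CA certificates from the runtime's default trust store.
    static List<X509Certificate> defaultTrustAnchors() {
        try {
            TrustManagerFactory tmf =
                    TrustManagerFactory.getInstance(TrustManagerFactory.getDefaultAlgorithm());
            // A null KeyStore means "use the default trust store"
            // (normally lib/security/cacerts unless javax.net.ssl.trustStore is set).
            tmf.init((KeyStore) null);
            List<X509Certificate> anchors = new ArrayList<>();
            for (TrustManager tm : tmf.getTrustManagers()) {
                if (tm instanceof X509TrustManager) {
                    Collections.addAll(anchors, ((X509TrustManager) tm).getAcceptedIssuers());
                }
            }
            return anchors;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Could not read the default trust store", e);
        }
    }

    public static void main(String[] args) {
        // Optional first argument: part of a CA subject name to look for.
        String filter = args.length > 0 ? args[0] : "";
        List<X509Certificate> anchors = defaultTrustAnchors();
        System.out.println(anchors.size() + " trusted CA certificates");
        for (X509Certificate cert : anchors) {
            String subject = cert.getSubjectX500Principal().getName();
            if (!filter.isEmpty() && subject.contains(filter)) {
                System.out.println(subject + " (valid until " + cert.getNotAfter() + ")");
            }
        }
    }
}
```

Running it once with the old bundled JRE and once with the new one gives a rough idea of how much the trust store has changed.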

The license issues are painful, but aside from that, we don’t want to be in the business of distributing JREs and implying that we will make future releases just to supply updated JREs. If volunteers step up to support that, then sure; otherwise, I am very much against this.

What would you expect from volunteers? Adding updated JREs to the build scripts? I can do this for Spark if someone shows me how.

Just FYI, I’ve been using the Eclipse OpenJ9 JRE 8 with Spark 2.9.3 with no problems at all.

Since Spark currently ships with the HotSpot variant (Oracle’s original JVM), I would say it is still safer to stick with it rather than OpenJ9. I have seen other apps that were designed for HotSpot not work correctly on OpenJ9.
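Since the HotSpot vs OpenJ9 question came up, here is a rough sketch for telling the two apart from inside a running JVM. It rests on an assumption, not a documented guarantee: OpenJ9/J9 builds usually report a `java.vm.name` containing "J9" (e.g. "Eclipse OpenJ9 VM"), while HotSpot-based builds typically report "Java HotSpot(TM) ..." or "OpenJDK ... Server VM". `VmFlavor` and `classify` are hypothetical names.

```java
// Hypothetical helper: guesses the JVM implementation from java.vm.name.
public class VmFlavor {

    // Heuristic only (assumption): J9-based VMs put "J9" in their name,
    // HotSpot-based builds say "HotSpot" or start with "OpenJDK".
    static String classify(String vmName) {
        if (vmName == null) {
            return "unknown";
        }
        if (vmName.contains("J9")) {
            return "J9";
        }
        if (vmName.contains("HotSpot") || vmName.startsWith("OpenJDK")) {
            return "HotSpot";
        }
        return "unknown";
    }

    public static void main(String[] args) {
        String name = System.getProperty("java.vm.name");
        System.out.println(name + " -> " + classify(name));
    }
}
```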