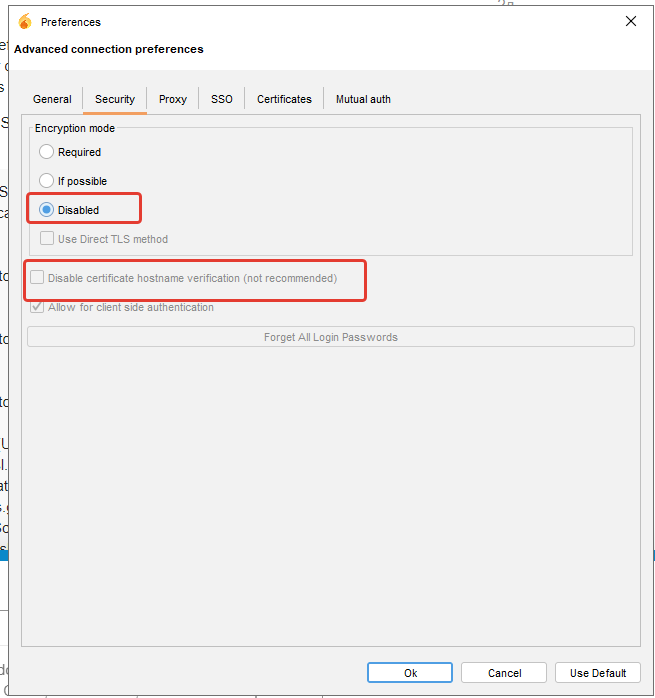

I like Spark as a client and prefer it over the other XMPP clients out there. I am trying to use it with IceWarp, which uses XMPP for its chat feature. When I try to log in to the server, I get a “Certificate path validation failed” error. The innermost cause in the trace reads “pathLenConstraint violated - this cert must be the last cert in the certification path”.

I can log in with Gajim and Swift, so the server seems to be working. Does anyone have any ideas on how to correct this?

org.jivesoftware.smack.SmackException: javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateException: java.security.cert.CertPathValidatorException: Certificate path validation failed

at org.jivesoftware.smack.tcp.XMPPTCPConnection$PacketReader.parsePackets(XMPPTCPConnection.java:1176)

at org.jivesoftware.smack.tcp.XMPPTCPConnection$PacketReader.access$1000(XMPPTCPConnection.java:1092)

at org.jivesoftware.smack.tcp.XMPPTCPConnection$PacketReader$1.run(XMPPTCPConnection.java:1112)

at java.lang.Thread.run(Unknown Source)

Caused by: javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateException: java.security.cert.CertPathValidatorException: Certificate path validation failed

at sun.security.ssl.Alerts.getSSLException(Unknown Source)

at sun.security.ssl.SSLSocketImpl.fatal(Unknown Source)

at sun.security.ssl.Handshaker.fatalSE(Unknown Source)

at sun.security.ssl.Handshaker.fatalSE(Unknown Source)

at sun.security.ssl.ClientHandshaker.serverCertificate(Unknown Source)

at sun.security.ssl.ClientHandshaker.processMessage(Unknown Source)

at sun.security.ssl.Handshaker.processLoop(Unknown Source)

at sun.security.ssl.Handshaker.process_record(Unknown Source)

at sun.security.ssl.SSLSocketImpl.readRecord(Unknown Source)

at sun.security.ssl.SSLSocketImpl.performInitialHandshake(Unknown Source)

at sun.security.ssl.SSLSocketImpl.startHandshake(Unknown Source)

at sun.security.ssl.SSLSocketImpl.startHandshake(Unknown Source)

at org.jivesoftware.smack.tcp.XMPPTCPConnection.proceedTLSReceived(XMPPTCPConnection.java:856)

at org.jivesoftware.smack.tcp.XMPPTCPConnection.access$2000(XMPPTCPConnection.java:155)

at org.jivesoftware.smack.tcp.XMPPTCPConnection$PacketReader.parsePackets(XMPPTCPConnection.java:1171)

… 3 more

Caused by: java.security.cert.CertificateException: java.security.cert.CertPathValidatorException: Certificate path validation failed

at org.jivesoftware.sparkimpl.certificates.SparkTrustManager.checkServerTrusted(SparkTrustManager.java:97)

at sun.security.ssl.AbstractTrustManagerWrapper.checkServerTrusted(Unknown Source)

… 14 more

Caused by: java.security.cert.CertPathValidatorException: Certificate path validation failed

at org.jivesoftware.sparkimpl.certificates.SparkTrustManager.doTheChecks(SparkTrustManager.java:127)

at org.jivesoftware.sparkimpl.certificates.SparkTrustManager.checkServerTrusted(SparkTrustManager.java:93)

… 15 more

Caused by: java.security.cert.CertPathValidatorException: basic constraints check failed: pathLenConstraint violated - this cert must be the last cert in the certification path

at sun.security.provider.certpath.PKIXMasterCertPathValidator.validate(Unknown Source)

at sun.security.provider.certpath.PKIXCertPathValidator.validate(Unknown Source)

at sun.security.provider.certpath.PKIXCertPathValidator.validate(Unknown Source)

at sun.security.provider.certpath.PKIXCertPathValidator.engineValidate(Unknown Source)

at java.security.cert.CertPathValidator.validate(Unknown Source)

at org.jivesoftware.sparkimpl.certificates.SparkTrustManager.validatePath(SparkTrustManager.java:270)

at org.jivesoftware.sparkimpl.certificates.SparkTrustManager.doTheChecks(SparkTrustManager.java:123)

… 16 more

Caused by: java.security.cert.CertPathValidatorException: basic constraints check failed: pathLenConstraint violated - this cert must be the last cert in the certification path

at sun.security.provider.certpath.ConstraintsChecker.checkBasicConstraints(Unknown Source)

at sun.security.provider.certpath.ConstraintsChecker.check(Unknown Source)

… 23 more
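In case it helps narrow this down, here is a minimal sketch of the basic constraints rule that the last "Caused by" refers to, as I understand it: a CA certificate's pathLenConstraint limits how many intermediate CA certificates may sit between it and the end-entity certificate. The class name `PathLenCheck`, the method `firstViolation`, and the sample values are hypothetical. This is not Spark's or the JDK's actual validation code, and it ignores the exemption for self-issued certificates. The only real API it relies on is `X509Certificate.getBasicConstraints()`, which returns -1 for a non-CA certificate, `Integer.MAX_VALUE` for a CA with no limit, and the pathLenConstraint otherwise.

```java
import java.security.cert.X509Certificate;

// Hypothetical helper, not part of Spark or Smack.
class PathLenCheck {

    /**
     * basicConstraints[i] holds X509Certificate.getBasicConstraints() for the
     * i-th certificate in the chain, ordered leaf first and root last.
     * Returns the index of the first issuer that breaks the rule, or -1 if none.
     */
    static int firstViolation(int[] basicConstraints) {
        for (int i = 1; i < basicConstraints.length; i++) {
            int pathLen = basicConstraints[i];
            int casBelow = i - 1; // intermediate CAs between this cert and the leaf
            // Every issuer must be a CA, and its limit must cover the CAs below it.
            if (pathLen == -1 || pathLen < casBelow) {
                return i;
            }
        }
        return -1;
    }

    /** Same check for a chain that has already been obtained from the server. */
    static int firstViolation(X509Certificate[] chain) {
        int[] values = new int[chain.length];
        for (int i = 0; i < chain.length; i++) {
            values[i] = chain[i].getBasicConstraints();
        }
        return firstViolation(values);
    }

    public static void main(String[] args) {
        // Made-up values: leaf, intermediate with pathLen 0, and a top CA with pathLen 0.
        int[] sample = {-1, 0, 0};
        System.out.println("First violating index: " + firstViolation(sample));
    }
}
```

If the chain the server sends is run through something like this, the returned index should point at the CA certificate whose pathLenConstraint is too small for the number of certificates presented beneath it.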