I was using Spark 2.7.5 without any issues. I uninstalled 2.7.5 and then installed 2.7.7.

The install seems to complete without any issues, but when I try to open Spark it just hangs. Looking in error.log, I see this:

```
javax.net.ssl.SSLHandshakeException: java.security.cert.CertificateException: Certificates does not conform to algorithm constraints
    at sun.security.ssl.Alerts.getSSLException(Unknown Source)
    at sun.security.ssl.SSLSocketImpl.fatal(Unknown Source)
    at sun.security.ssl.Handshaker.fatalSE(Unknown Source)
    at sun.security.ssl.Handshaker.fatalSE(Unknown Source)
    at sun.security.ssl.ClientHandshaker.serverCertificate(Unknown Source)
    at sun.security.ssl.ClientHandshaker.processMessage(Unknown Source)
    at sun.security.ssl.Handshaker.processLoop(Unknown Source)
    at sun.security.ssl.Handshaker.process_record(Unknown Source)
    at sun.security.ssl.SSLSocketImpl.readRecord(Unknown Source)
    at sun.security.ssl.SSLSocketImpl.performInitialHandshake(Unknown Source)
    at sun.security.ssl.SSLSocketImpl.startHandshake(Unknown Source)
    at sun.security.ssl.SSLSocketImpl.startHandshake(Unknown Source)
    at org.jivesoftware.smack.XMPPConnection.proceedTLSReceived(XMPPConnection.java:871)
    at org.jivesoftware.smack.PacketReader.parsePackets(PacketReader.java:258)
    at org.jivesoftware.smack.PacketReader.access$000(PacketReader.java:46)
    at org.jivesoftware.smack.PacketReader$1.run(PacketReader.java:72)
Caused by: java.security.cert.CertificateException: Certificates does not conform to algorithm constraints
    at sun.security.ssl.AbstractTrustManagerWrapper.checkAlgorithmConstraints(Unknown Source)
    at sun.security.ssl.AbstractTrustManagerWrapper.checkAdditionalTrust(Unknown Source)
    at sun.security.ssl.AbstractTrustManagerWrapper.checkServerTrusted(Unknown Source)
    … 12 more
```

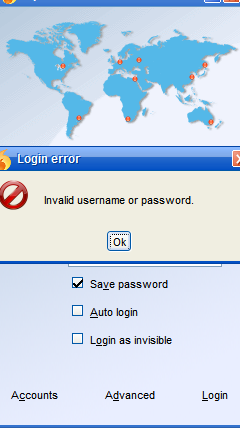
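The "Caused by" line says the server's certificate chain was rejected by the Java runtime's algorithm constraints, which are configured through the `jdk.certpath.disabledAlgorithms` and `jdk.tls.disabledAlgorithms` security properties. A minimal sketch for checking what a given runtime disables, assuming (not confirmed) that 2.7.7 runs on a different, newer JRE than 2.7.5 did; the class name `DisabledAlgorithms` and its `describe` helper are just illustrative names:

```java
import java.security.Security;

// Diagnostic sketch: prints the JRE's certificate and TLS algorithm restrictions.
// Assumption: it is run with the same Java runtime that Spark itself uses.
final class DisabledAlgorithms {

    // Security properties the JRE consults when validating a TLS handshake.
    static final String[] KEYS = {
        "jdk.certpath.disabledAlgorithms", // restrictions on certificate chains
        "jdk.tls.disabledAlgorithms"       // restrictions on TLS protocols and ciphers
    };

    // Formats one property as "key = value", or "key = (not set)" if absent.
    static String describe(String key) {
        String value = Security.getProperty(key);
        return key + " = " + (value == null ? "(not set)" : value);
    }

    public static void main(String[] args) {
        for (String key : KEYS) {
            System.out.println(describe(key));
        }
    }
}
```

Running it with the `java` executable of the runtime Spark actually uses should show whether entries such as `MD5` or `RSA keySize < 1024` are on the list, which could then be compared against the signature algorithm and key size of the server's certificate.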

Can anyone help?